3 Performance Tuning Tips For ElasticSearch

Over the last year, we’ve run into three main tuning scenarios where the defaults were not sufficient. This was primarily due to data growth vs request load. Check them out to avoid any unwanted system crashes.

Field Data (Cache.. sort of)

One thing you have to be aware of is the concept of Field Data. In most of the ES docs, it’s referred to as a cache but ES developers will tell you that’s not the right way to think about it. This is mainly because if your data exceeds this memory set, you will see degraded performance as disk reads will ensue.

Because ES devs firmly believe this is a requirement rather than a cache, they leave the indices.fielddata.cache.size setting unbounded by default. In other words, your JVM can run out of memory if you don’t set this value to something less than your -Xmx heap param.

We saw a significant degradation in faceting (now aggregations) performance when we were evicting field data entries. Effectively we were preventing OutOfMemoryErrors from happening, but performance took a huge hit.

How we do it

We keep a very close eye on how much Field Data is being used in our clusters but we also do capacity planning. Below is our methodology:

- We take the sum of all data node JVM heap sizes

- We allocate 75% of the heap to indices.fielddata.cache.size

- As our data set grows, if the utilization starts approaching that 75% barrier, we will add additional data nodes to spread the data and therefore the cache utilization horizontally.
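The arithmetic behind this methodology can be sketched in a few lines of Python. This is only an illustration: the function names, the 16 GiB heaps, the usage figure and the 90% warning threshold are all hypothetical, and the heap sizes and field data usage would have to come from whatever monitoring you already have.

```python
GIB = 1024 ** 3


def fielddata_budget_bytes(heap_sizes_bytes, cache_fraction=0.75):
    """Cluster-wide field data budget: sum of data node heaps times the cache fraction."""
    return sum(heap_sizes_bytes) * cache_fraction


def fielddata_utilization(used_bytes, heap_sizes_bytes, cache_fraction=0.75):
    """Share of the budget in use; 1.0 means the budget is exhausted."""
    budget = fielddata_budget_bytes(heap_sizes_bytes, cache_fraction)
    if budget <= 0:
        raise ValueError("heap sizes must sum to a positive number")
    return used_bytes / budget


def should_add_data_node(used_bytes, heap_sizes_bytes, cache_fraction=0.75, warn_at=0.9):
    """True once utilization reaches the warning threshold (warn_at is an assumed value)."""
    return fielddata_utilization(used_bytes, heap_sizes_bytes, cache_fraction) >= warn_at


if __name__ == "__main__":
    heaps = [16 * GIB] * 4   # hypothetical: four data nodes, 16 GiB heap each
    used = 40 * GIB          # hypothetical field data usage summed over all nodes
    print(f"budget: {fielddata_budget_bytes(heaps) / GIB:.0f} GiB")
    print(f"utilization: {fielddata_utilization(used, heaps):.0%}")
    print("add a node:", should_add_data_node(used, heaps))
```

Alerting some way below 100% of the budget leaves time to bring a new data node online before evictions start.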

Circuit Breakers

ElasticSearch is adding more capabilities for preventing your cluster from hitting an OutOfMemoryError. It’s quite nice actually. You just need to understand the default values for these new settings and how they relate to any existing settings you may have overridden.

For us, since we specify indices.fielddata.cache.size, we also need to be aware of indices.breaker.fielddata.limit. It defaults to 60% which means our cluster will start throwing exceptions even though there is technically plenty of memory on the box to serve the request. Remember, we have our field data size set to 75%, well above the default circuit breaker limit.

We found this out the hard way when circuit breaker exceptions started showing up in our logs.

ES provides some great docs here about this topic specifically: [http://www.elasticsearch.org/guide/en/elasticsearch/guide/current/_limiting_memory_usage.html#circuit-breaker]

How we do it

We set indices.breaker.fielddata.limit to 85% in elasticsearch.yml. This gives us some wiggle room above our field data cache limit of 75%.
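The relationship between the two settings is easy to get wrong, so a small sanity check can help. The Python sketch below is a hypothetical helper, not part of ElasticSearch. It assumes both values are written as percentages (the cache size can also be an absolute value, which this sketch does not handle) and simply verifies that the breaker limit sits above the cache size.

```python
def parse_percent(value):
    """Turn a setting such as '75%' into the fraction 0.75."""
    text = value.strip()
    if not text.endswith("%"):
        raise ValueError(f"expected a percentage such as '75%', got {value!r}")
    return float(text[:-1]) / 100.0


def breaker_headroom(settings):
    """Breaker limit minus cache size, as a fraction of the heap."""
    cache = parse_percent(settings["indices.fielddata.cache.size"])
    limit = parse_percent(settings["indices.breaker.fielddata.limit"])
    return limit - cache


def check_fielddata_settings(settings):
    """Return a list of problems; an empty list means the two settings are consistent."""
    problems = []
    if breaker_headroom(settings) <= 0:
        problems.append(
            "indices.breaker.fielddata.limit must be above indices.fielddata.cache.size"
        )
    return problems


if __name__ == "__main__":
    ours = {
        "indices.fielddata.cache.size": "75%",
        "indices.breaker.fielddata.limit": "85%",
    }
    # Same cache size, but with the breaker left at the 60% default described above.
    with_default_breaker = {**ours, "indices.breaker.fielddata.limit": "60%"}
    print(check_fielddata_settings(ours))
    print(check_fielddata_settings(with_default_breaker))
```

With the 60% default the headroom is negative, which is the situation described above: the breaker trips before the cache ever reaches its configured size.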

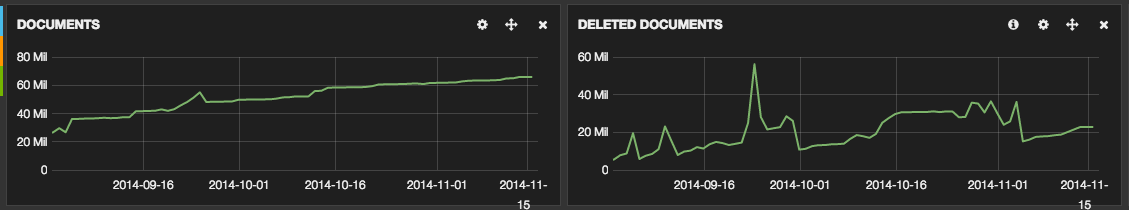

Deleted Documents

One thing you have to be careful of (and isn’t immediately obvious without a tool like Marvel) is the number of deleted documents you have sitting around in your cluster. The reason you want to pay attention to this is that they consume disk space. If you’re sitting on SSDs in AWS like us, this is something you probably want to monitor as SSD space is relatively expensive.

A deleted document can of course be the result of actually performing a DELETE against the ES API. But more likely, it’s a result of simply updating an existing document. I won’t go into too much detail here, but effectively because ES uses immutable segment files, every update rewrites the entire document, leaving older versions in other segment files. This increases the deleted document count.

The number of deleted documents is mainly driven by a ratio to total (non-deleted) documents in the system. The more documents you add to your cluster, the more deleted documents will be permitted to linger in older segment files.

The ratio can be tuned by specifying index.merge.policy.reclaim_deletes_weight. Increasing this weight will cause more aggressive merges to take place.

Unfortunately, we’re using version 1.3.2 of ES and there is a bug present that prevents this setting from being respected.
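Whether or not the merge setting is respected, the ratio itself is simple to track. The Python sketch below assumes you already have per-index document counts in a dict shaped like {"count": ..., "deleted": ...}, where "count" holds live documents. That shape, the function names and the 20% threshold are all assumptions made for illustration.

```python
def deleted_ratio(docs):
    """Deleted documents relative to live (non-deleted) documents."""
    live = docs["count"]
    deleted = docs["deleted"]
    if live == 0:
        # No live documents: the ratio is undefined, so report 0 or infinity.
        return 0.0 if deleted == 0 else float("inf")
    return deleted / live


def indices_over_threshold(docs_by_index, threshold=0.2):
    """Names of indices whose deleted ratio exceeds the (hypothetical) threshold."""
    return sorted(
        name for name, docs in docs_by_index.items()
        if deleted_ratio(docs) > threshold
    )


if __name__ == "__main__":
    # Hypothetical numbers for two indices.
    sample = {
        "contacts": {"count": 1000, "deleted": 250},
        "accounts": {"count": 5000, "deleted": 100},
    }
    for name, docs in sample.items():
        print(name, f"{deleted_ratio(docs):.1%}")
    print("over threshold:", indices_over_threshold(sample))
```

Tracking this number over time shows how much disk is tied up in old document versions, which matters most when the storage is expensive.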

Other

We put together a more in depth presentation around the issues we’ve faced building out a CRM on top of ElasticSearch here: